A few weeks ago, we had a chance meeting with the CIO of one big company in the Asia region. He shared that his team is having some performance issues with the existing data warehouse system and it was taking very long time for the data to be ready for end users every day and asked for our help.

We took this opportunity to address his issues and trying to "Sharpen the Saw" around our large volume Data Platform activities, we built a very quick PoC to demonstrate how the Azure Platform (specifically SQL Data Warehouse and Analysis Services) would resolve their pain points, and also align with the best practices in term of designing data warehouse model, applying ETL framework to control the data quality & capture audit information and some analytical stories in their industry.

As this is not an atypical use case, I thought it would be useful to share our journey and findings. Below is what we proposed and helped them to achieve.

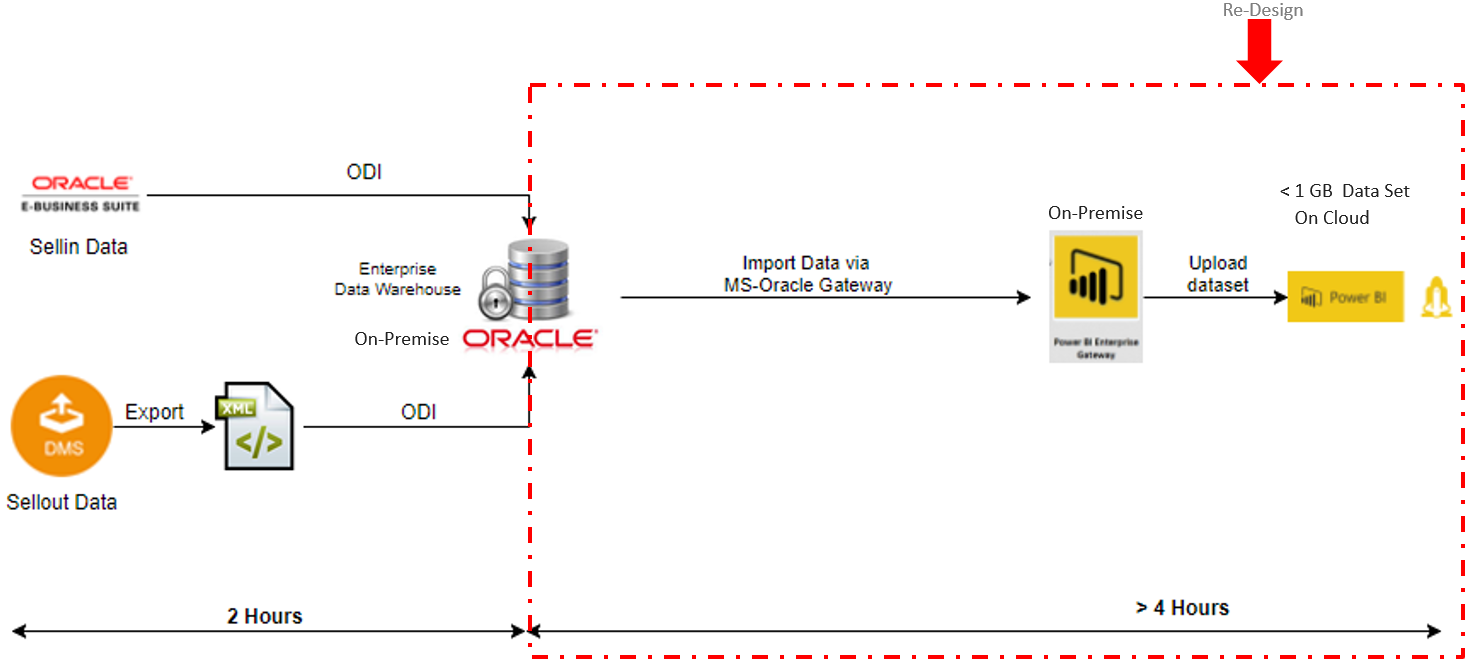

Before Architecture

Customer is using Power BI service to connect directly to their DW system sit on premises and the data is imported from DW to Power BI every day using Power BI gateway. The data volume of the existing DW system is around 100 GB and customer has a road map to extend the business requirement to different subject areas and expected data size to be 10 TB within the next 3 years.

Problem

With the before architecture, customer is facing some issues which are listed below.

- The limited dataset refreshment (1GB) and time consumption for data available in Power BI Cloud.

- Power BI Data set take 4 hours to complete.

- Unable to meet new business requirements due to exceeding Power BI 1GB limit.

- Current workaround of splitting the few PBI files, but new issues on some existing reports and overall timing is more than 4 hours.

Customer Expectation

Below is what customer expected as outcome of the project.

- Propose the good Data Warehouse (DW) and Business Intelligence (BI) solution architecture with the best resources sizing, costing plan for the propose architecture.

- Show the real data quality control cases with dimension/hierarchy and transactional data.

- The proof of data availability in the shorted time, before 7AM daily.

- Minimum data period is 15 months.

- Interaction time on dashboards/reports is less than 10 seconds.

- Dashboards/Reports loading time is less than 10 seconds.

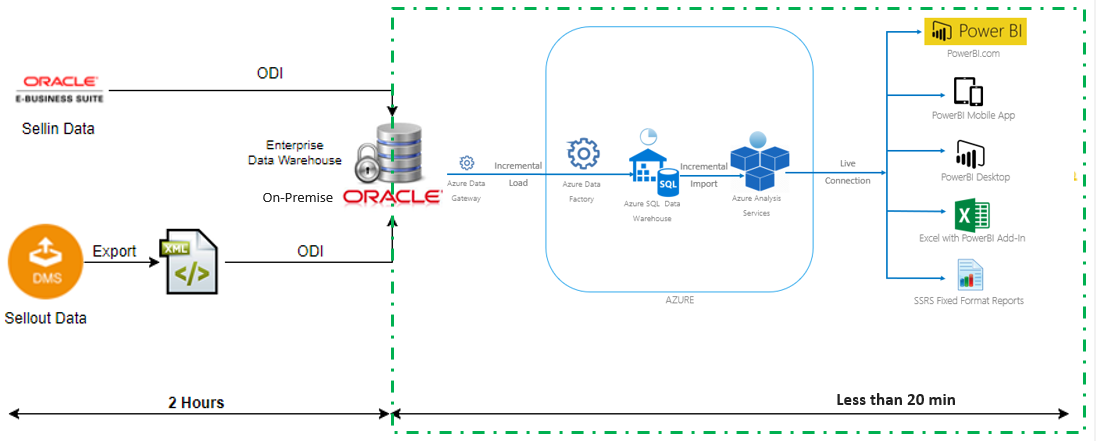

Proposed Architecture

While in the long run we want the customer to migrate their ETL processing to the cloud, we wanted to have some quick wins. With that in mind we proposed the architecture below which will help them to resolve all the issues and achieve good performance including data refresh and query performance in a very short period.

The data will be copied incrementally every day from on premises Data Warehouse to Azure SQL Data Warehouse using Azure Data Factory with Poly-Base feature. After that, it will be incrementally imported to Azure Analysis which is the in-memory engine and the centralized model.

Power BI connects to Azure Analysis Services (in-memory engine) using Live Connection mode.

Why Proposed Architecture

|

Approach |

Azure SQL Data Warehouse and Analysis Services |

|

Cloud Approach |

PaaS - Fully Managed |

|

Data Architecture |

MPP + In Memory Cache |

|

Scalability |

SQL Warehouse - Unlimited Analysis Services - Multiple instances |

|

Data Model |

Multiple AS models by Subject Area |

|

Uptime |

SQL Warehouse - During batch time Analysis Services - Always On |

|

Focus |

Performance (Batch & Runtime) |

|

Expected Monthly Consumption |

USD 1-3k |

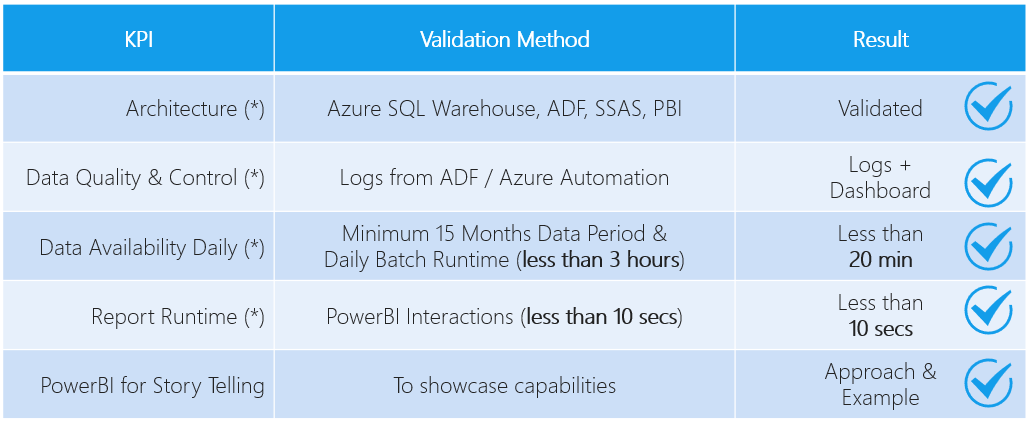

Results

Below are the results we helped the customer achieve in a matter of weeks. More than that, we also helped them finalize their road map to move their existing data warehousing system to cloud-based with Azure SQL DW.

Conclusion

With Azure SQL Data Warehouse, the system can quickly run complex queries across petabytes of data. Integrating with Azure Data Factory with Poly-Base and using power of Massively Parallel Processing (MPP) you can build high performance data pipelines to meet any kind of analytics on the cloud.

This combined with Azure Analysis Services, a BI professional can create a semantic model over the raw data and share it with business users so that all they need to do is connect to the model from any BI tool and immediately explore the data and gain insights. Azure Analysis Services uses a highly optimized in-memory engine to provide responses to user queries at the speed of thought.

Reach out to us if you want to know more about this or other projects we have sucessfully migrated to Azure.